Publications

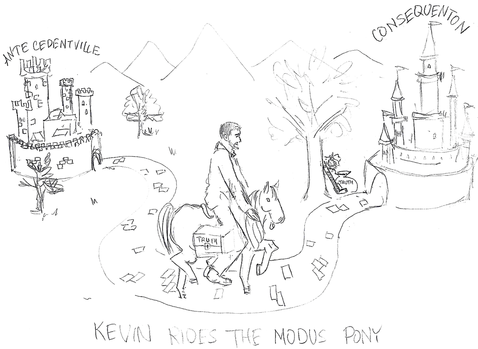

Mental capacities for perceiving, remembering, thinking, and planning involve the processing of structured mental representations. A compositional semantics of such representations would explain how the content of any given representation is determined by the contents of its constituents and their mode of combination. While many have argued that semantic theories of mental representations would have broad value for understanding the mind, there have been few attempts to develop such theories in a systematic and empirically constrained way. This paper contributes to that end by developing a semantics for a ‘fragment’ of our mental representational system: the visual system’s representations of the bounding contours of objects. At least three distinct kinds of composition are involved in such representations: ‘concatenation’, ‘feature composition’, and ‘contour composition’. I sketch the constraints on and semantics of each of these. This account has three principal payoffs. First, it models a working framework for compositionally ascribing structure and content to perceptual representations, while highlighting core kinds of evidence that bear on such ascriptions. Second, it shows how a compositional semantics of perception can be compatible with holistic, or Gestalt, phenomena, which are often taken to show that the whole percept is ‘other than the sum of its parts’. Finally, the account illuminates the format of a key type of perceptual representation, bringing out the ways in which contour representations exhibit domain-specific form of the sort that is typical of structured icons such as diagrams and maps, in contrast to typical discursive representations of logic and language.

It is commonly assumed that images, whether in the world or in the head, do not have a privileged analysis into constituent parts. They are thought to lack the sort of syntactic structure necessary for representing complex contents and entering into sophisticated patterns of inference. I reject this assumption. “Image grammars” are models in computer vision that articulate systematic principles governing the form and content of images. These models are empirically credible and can be construed as literal grammars for images. Images can have rich syntactic structure, though of a markedly different form than sentences in language.

Perception is a central means by which we come to represent and be aware of particulars in the world. I argue that an adequate account of perception must distinguish between what one perceives and what one's perceptual experience is of or about. Through capacities for visual completion, one can be visually aware of particular parts of a scene that one nevertheless does not see. Seeing corresponds to a basic, but not exhaustive, way in which one can be visually aware of an item. I discuss how the relation between seeing and visual awareness should be explicated within a representational account of the mind. Visual awareness of an item involves a primitive kind of reference: one is visually aware of an item when one's visual perceptual state succeeds in referring to that particular item and functions to represent it accurately. Seeing, by contrast, requires more than successful visual reference. Seeing depends additionally on meta-semantic facts about how visual reference happens to be fixed. The notions of seeing and of visual reference are both indispensable to an account of perception, but they are to be characterized at different levels of representational explanation.

People have expectations about how colors map to concepts in visualizations, and they are better at interpreting visualizations that match their expectations. Traditionally, studies on these expectations (inferred mappings) distinguished distinct factors relevant for visualizations of categorical vs. continuous information. Studies on categorical information focused on direct associations (e.g., mangos are associated with yellows) whereas studies on continuous information focused on relational associations (e.g., darker colors map to larger quantities; dark-is-more bias). We unite these two areas within a single framework of assignment inference. Assignment inference is the process by which people infer mappings between perceptual features and concepts represented in encoding systems. Observers infer globally optimal assignments by maximizing the “merit,” or “goodness,” of each possible assignment. Previous work on assignment inference focused on visualizations of categorical information. We extend this approach to visualizations of continuous data by (a) broadening the notion of merit to include relational associations and (b) developing a method for combining multiple (sometimes conflicting) sources of merit to predict people's inferred mappings. We developed and tested our model on data from experiments in which participants interpreted colormap data visualizations, representing fictitious data about environmental concepts (sunshine, shade, wildfire, ocean water, glacial ice). We found that both direct and relational associations contribute independently to inferred mappings. These results can be used to optimize visualization design to facilitate visual communication.
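The idea of choosing a globally optimal assignment by scoring every possible assignment can be illustrated with a toy sketch. This is not the model from the paper: the merit values are invented for illustration, the names `combined_merit` and `best_assignment` are hypothetical, and the weighted sum used to combine direct and relational merit is an assumed stand-in for whatever combination method the paper develops.

```python
from itertools import permutations


def combined_merit(direct, relational, weight=0.5):
    """Blend two merit tables with a weighted sum (an assumed combination rule)."""
    return {key: weight * direct[key] + (1 - weight) * relational[key]
            for key in direct}


def best_assignment(concepts, colors, merit):
    """Score every one-to-one assignment and return the one with the highest total merit."""
    best_perm, best_score = None, float("-inf")
    for perm in permutations(colors, len(concepts)):
        score = sum(merit[(concept, color)]
                    for concept, color in zip(concepts, perm))
        if score > best_score:
            best_perm, best_score = perm, score
    return dict(zip(concepts, best_perm)), best_score


# Hypothetical merits for mapping the ends of a colormap to "more" vs. "less" sunshine.
concepts = ["more sunshine", "less sunshine"]
colors = ["light", "dark"]

# Direct association: sunshine goes with light colors (made-up numbers).
direct = {
    ("more sunshine", "light"): 0.9, ("more sunshine", "dark"): 0.1,
    ("less sunshine", "light"): 0.1, ("less sunshine", "dark"): 0.9,
}
# Relational association: dark-is-more bias (made-up numbers).
relational = {
    ("more sunshine", "light"): 0.3, ("more sunshine", "dark"): 0.7,
    ("less sunshine", "light"): 0.7, ("less sunshine", "dark"): 0.3,
}

mapping_blend, score_blend = best_assignment(
    concepts, colors, combined_merit(direct, relational, weight=0.5))
mapping_relational, _ = best_assignment(
    concepts, colors, combined_merit(direct, relational, weight=0.0))
```

With these invented numbers the two sources of merit conflict, so the winning assignment depends on the weight. An even blend lets the direct association win, while relying on the relational merit alone flips the mapping. Exhaustive search is only practical for a handful of concepts.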

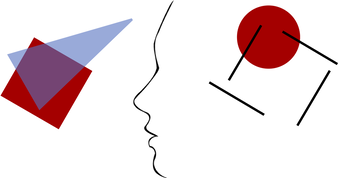

When interpreting the meanings of visual features in information visualizations, observers have expectations about how visual features map onto concepts (inferred mappings). In this study, we examined whether aspects of inferred mappings that have been previously identified for colormap data visualizations generalize to a different type of visualization, Venn diagrams. Venn diagrams offer an interesting test case because empirical evidence about the nature of inferred mappings for colormaps suggests that established conventions for Venn diagrams are counterintuitive. Venn diagrams represent classes using overlapping circles and express logical relationships between those classes by shading out regions to encode the concept of non-existence, or none. We propose that people do not simply expect shading to signify non-existence, but rather they expect regions that appear as holes to signify non-existence (the hole hypothesis). The appearance of a hole depends on perceptual properties in the diagram in relation to its background. Across three experiments, results supported the hole hypothesis, underscoring the importance of configural processing for interpreting the meanings of visual features in information visualizations.

An ongoing philosophical discussion concerns how various types of mental states fall within broad representational genera: for example, whether perceptual states are "iconic" or "sentential," "analog" or "digital," and so on. Here, I examine the grounds for making much more specific claims about how mental states are structured from constituent parts. For example, the state I am in when I perceive the shape of a mountain ridge may have as constituent parts my representations of the shapes of each peak and saddle of the ridge. More specific structural claims of this sort are a guide to how mental states fall within broader representational kinds. Moreover, these claims have significant implications of their own about semantic, functional, and epistemic features of our mental lives. But what are the conditions on a mental state's having one type of constituent structure rather than another? Drawing on explanatory strategies in vision science, I argue that, other things being equal, the constituent structure of a mental state determines what I call its distributional properties, namely, how mental states of that type can, cannot, or must co-occur with other mental states in a given system. Distributional properties depend critically on, and are informative about, the underlying structures of mental states; they abstract in important ways from aspects of how mental states are processed; and they can yield significant insights into the variegation of psychological capacities.

We can perceive things, in many respects, as they really are. Nonetheless, our perception of the world is perspectival. You can correctly see a coin as circular from most angles. Yet the coin looks different when slanted than when head-on, and there is some respect in which the slanted coin looks similar to a head-on ellipse. Many hold that perception is perspectival because we perceive certain properties that correspond to the "looks" of things. I argue that this view is misguided. I consider the two standard versions of this view. What I call the pluralist approach fails to give a unified account of the perspectival character of perception, while what I call the perspectival properties approach violates central commitments of contemporary psychology. I propose instead that perception is perspectival because of the way perceptual states are structured from their parts.

We do not just perceive a table as having parts—a tabletop and legs. When you perceive the table, the state you are in itself has parts—states of perceiving the sizes, shapes, and colors of the tabletop and of the legs. These perceptual states themselves have parts, though they are not so easily identified. The idea that perceptual states have parts that can combine and recombine in rule-governed ways is foundational to contemporary psychology, and over the last century perceptual psychologists have closely investigated the ways in which our perceptual states are structured. In my dissertation, I argue that we can resolve longstanding problems in the philosophy of mind by attending to the parts of perception and how they combine. In doing so, I clarify and regiment the conception of combinatorial structure that is implicit in psychology.

Committee: Tyler Burge (chair), Sam Cumming, Gabriel Greenberg, Phil Kellman

In progress

Compositionality in Perception: A Framework [draft]

Perception involves the processing of content or information about the world. In what form is this content represented? I argue that perception is widely compositional. The perceptual system represents many stimulus features (including shape, orientation, and motion) in terms of arrangements of other features (shape parts, slant and tilt, grouped and residual motion vectors). But compositionality can take a variety of forms. The ways in which perceptual representations compose are markedly different from the ways in which sentences or thoughts are thought to be composed. Throughout, I suggest that the thesis that perception is compositional is not itself a concrete hypothesis with specific predictions, but rather it affords a framework for developing and evaluating empirical hypotheses about the nature of perceptual representations. The question is not just whether perception is compositional, but how. Answering this latter question can provide fundamental insights into the place of perception within the mind.

The Spatial Unity of Perception

The brain encodes spatial content with respect to different "frames of reference." What counts as "left" with respect to an eye-centered reference frame might count as "right" or "straight-ahead" in a head- or object-centered frame. How is it, then, that we seem to perceive things within a common, unified space? Is there just one ultimate reference frame of experience? Or is spatial unity something of an illusion, arising from the skill with which we coordinate between disjoint reference frames? I argue that neither of these possibilities is adequate to explaining the spatial unity of perception. Many of our experiences are "multiplex," in the sense that they incorporate multiple reference frames within one integrated representation. I argue that the spatial unity of perception depends on our ability to integrate multiple reference frames into structured representations of spatial relationships.

Gazing at the Interface

The mind represents things in many different codes, or formats. How do representations in one format manage to causally interact with representations in another, in a semantically coherent fashion? This is the "interface problem." I will argue, first, that interfaces are common and relatively well-understood. I survey how information is reformatted throughout the visual hierarchy and in the goal-directed control of gaze and reach. Second, I argue that these interfaces share a common feature: they incorporate inductive biases. When, e.g., representations of 2D surfaces are transformed into representations of 3D shapes, content is not just re-represented in a new format; rather, it is selectively modified and amplified. Interfaces rarely involve pure translation. Finally, I raise a puzzle for the interface between perception and conceptual thought. On many accounts, conceptualization involves selecting some perceptual content and transforming it into a co-extensional content suitable for thought. I pose a dilemma: either conceptualization is like the other formats, in which case concepts may amplify or modify the perceptual contents on which they are based; or conceptualization is special, in which case we want an explanation of why.

Logical Form and Ecological Form

Mental states are complex. The state I am in when I see a maple leaf consists in having a representation of the leaf’s orange color and a representation of its articulated shape. My representation of the leaf’s shape is itself complex, consisting in representations of the peaks, valleys, and sides that make up the leaf’s outline. Focusing on vision, I argue that perceptual representations have domain-specific form, or "ecological form." There are constraints on how perceptual representations can combine, such that the very structure of a complex perceptual state (the mode of composition of its representational parts) imposes substantive commitments about the things represented. Perceptual representations are structurally limited, roughly, to represent circumstances that would plausibly occur in our normal environment. The way shape representations and color representations can and cannot combine reflects regularities in how shapes and colors are co-instantiated in our normal environments. The way representations of contour segments can and cannot combine into representations of whole outline shapes reflects regularities in how contours actually do and do not operate in our environment. I discuss how the ecological form of perceptual states may contribute to perceptual warrant and its relation to the “logical form” of thought and language.

Public philosophy

(April 11, 2019) "Do You Compute?" Aeon [link]

‘The brain is a computer’ – this claim is as central to our scientific understanding of the mind as it is baffling to anyone who hears it. We are either told that this claim is just a metaphor or that it is in fact a precise, well-understood hypothesis. But it’s neither. We have clear reasons to think that it’s literally true that the brain is a computer, yet we don’t have any clear understanding of what this means. That’s a common story in science.

(May 12, 2017) "O Ant, Where Art Thou." The Daily Ant [link]

Do ants have any idea where they are and where home is? When they go out into the world, do they grasp how far they have gone or what turns their path has taken? Desert ants (Cataglyphis) are able to return reliably to their homes, having left them in search of food. But the ability to reliably get back home does not imply that one has an idea, a mental representation or map, that specifies where in space home is located. Reflecting on why not helps us to get some purchase on a broader question: What sorts of abilities, or behaviors, indicate the presence of such mental representations? What abilities or behaviors indicate the presence of mind?

SSHRC Insight Development Grant: Forms of Mind

I am a recipient of a SSHRC Insight Development Grant to support my project, Forms of Mind. The purpose of this project is to explicate what I call the structural warrant that perception supplies to our beliefs, in virtue of the format or structure of perceptual representations. Perceptual representations have a compositional structure, so that representations of outline shapes, for example, are composed from representations of curved segments of an object's boundary. The constraints on how representational constituents can and cannot combine into more complex perceptual representations reflect certain implicit assumptions or biases concerning the perceived world. These structural constraints can contribute to the more reliable formation of accurate perceptual states and perceptual beliefs by ensuring that, for the most part, any perceptual representation that is structurally possible is a representation of an ecologically plausible scene.